-

ORACLE 19c RAC local listener supports no services error

Hi Readers, in this blogpost i am sharing steps to follow in order to resolve local listener issue (unable to connect to db through local physical node ip as no services showing reg. in local listener ) in a 2 NODE RAC setup.

User was complaining of below error while using dblink (which was using physical node ip/local listener for connection) – ORA-12514: TNS:listener does not currently know of service requested in connect descriptor

Connections through scan ip was working fine, and connections to 2nd cluster node also worked fine. But no services showing registered in 1st node local listener as shown below . ALTER SYSTEM REGISTER from sqlplus also didnt fix issue.

On checking local listener parameter – it is set to vips of both nodes as in an ideal RAC env.

Let us check VIP stats from crsctl if they are both online –

as shown below VIP is in intermediate state.

we need to shutdown and start vip from oracle user.

srvctl stop vip -vip drpadb01

check vip is offline now.

start vip – srvctl start vip -vip drpadb01

check status now of both vips –

now let us check service status in local listener. db services registered now with listener.

-

19C CDB STANDBY DATABASE OUT OF SYNC DUE TO ORA-15055 & ORA-00020

Hi Readers, in this blogpost i am sharing steps to fix DR (standby database) sync issue due to below error –

Error –

2023-04-17T07:41:29.364469+05:30

Errors in file /u02/app/oracle/diag/rdbms/drpstgdb/PSTMDB/trace/PSTGDB_pr0q_15533286.trc:

ORA-01115: IO error reading block from file 173 (block # 3383745)

ORA-01110: data file 173: ‘+DATA/DRPSTMDB/ED6970942CDE017CE0530A3A03A112D3/DATAFILE/user.758.1126160105’

ORA-15055: unable to connect to ASM instance

ORA-15055: unable to connect to ASM instance

ORA-00020: maximum number of processes (120) exceededSYNC status –

set lines 250 pages 250

col HOST_NAME for a15

col DB_Start_Time for a20

alter session set nls_date_format=’DD-MON-YYYY HH24:MI:SS’;

SELECT NAME as DB_NAME,instance_name,OPEN_MODE,HOST_NAME,PROTECTION_MODE,database_role,logins,to_char(startup_time,’DD-MON-YYYY HH24:MI’) DB_Start_Time FROM

gV$INSTANCE,v$database;

select sysdate from dual;

select primary.thread#,

primary.maxsequence primaryseq,

standby.maxsequence standbyseq,

primary.maxsequence – standby.maxsequence gap

from ( select thread#, max(sequence#) maxsequence

from v$archived_log

where archived = ‘YES’

and resetlogs_change# = ( select d.resetlogs_change# from v$database d )

group by thread# order by thread# ) primary,

( select thread#, max(sequence#) maxsequence

from v$archived_log

where applied = ‘YES’

and resetlogs_change# = ( select d.resetlogs_change# from v$database d )

group by thread# order by thread# ) standby

where primary.thread# = standby.thread#;

!date

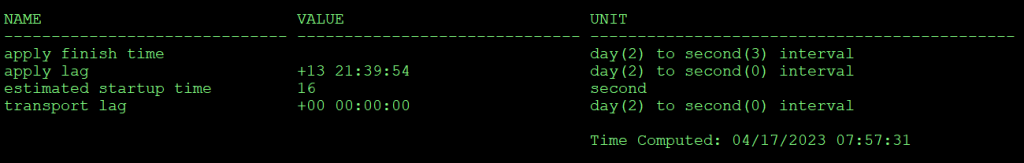

CHECK TRANSPORT AND APPLY LAG –

query –

col name for a30

col value for a30

select

NAME Name,

VALUE Value,

UNIT Unit

from v$dataguard_stats

union

select null,null,’ ‘ from dual

union

select null,null,’Time Computed: ‘||MIN(TIME_COMPUTED)

from v$dataguard_stats;Here transport lag is 0 and apply lag is 21 Hrs, so issue is local to standby db system.

STOP/start of MRP did not help. and MRP was terminating due to below error –

Background Media Recovery process shutdown (PSTGDB)

2023-04-17T08:29:27.666756+05:30

Shutting down ORACLE instance (abort) (OS id: 59900504)

2023-04-17T08:29:27.668536+05:30

Shutdown is initiated by oraagent.bin@ (TNS V1-V3).

License high water mark = 104solution –

We need to check max process utilisation of asm instance and increase process limit.

select * from v$resource_limit

where RESOURCE_NAME = ‘processes’;

STOP MRP APPLY –

SQL> ALTER DATABASE RECOVER MANAGED STANDBY DATABASE CANCEL;

Database altered.

STOP DATABASE –

bash-4.4$ srvctl stop database -d DRPSTMDB

login as grid user – login to sqlplus as sysasm (not sysdba to change asm paramters).

alter system set processes=360 scope=spfile;

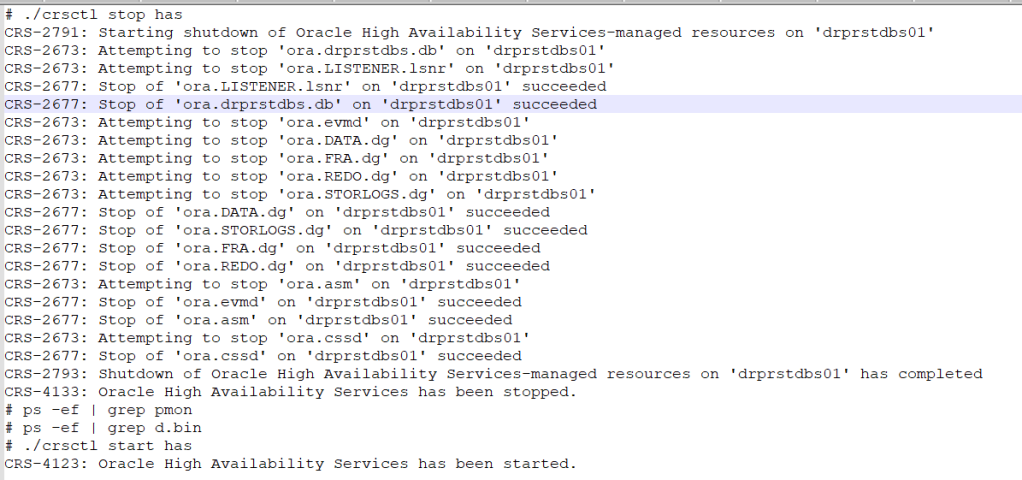

stop start crs/asm instance

verify process change post startup –

start the db instance and start MRP process.

srvctl start database -d DRPSTMDB -o “read only” (as this is an active dataguard standby open for read-only queries).

DGMGRL> EDIT DATABASE drpstmdb SET STATE=’APPLY-ON’;

Succeeded.check gap after sometime. Issue resolved now as gap has reduced.

-

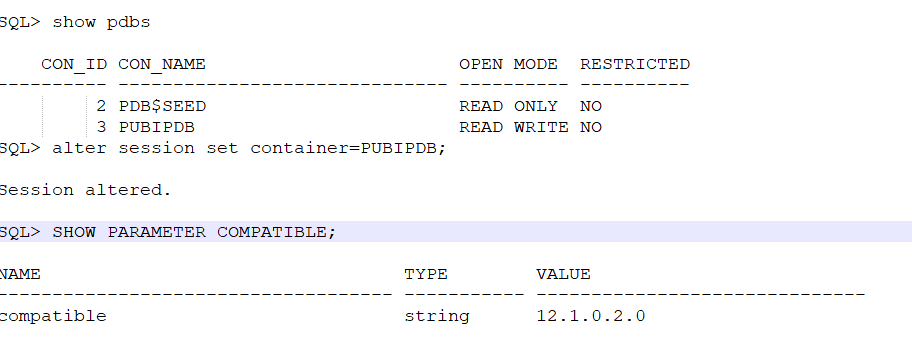

EXPDP/IMPDP FROM 19C TO 12CR1 PDB using remap and version options

Hi Readers, in this blogpost i am sharing steps of how you can export a table from a 19c PDB database to a 12.1 PDB database where source schema,table name and tablespace name will be re-mapped at target. Also, we will use advanced compression while taking export backup.

PFB SUMMARY OF SOURCE AND TARGET –

- SOURCE PDB DETAILS –

CHECK TARGET PDB COMPATIBLE –

if compatible is not same then higher db version export backups cannot be imported into lower db version and below error is observed. However you can export from lower to higher without setting any version during bkp.

2. check source table size AND tablespace-

SELECT SEGMENT_NAME,OWNER,ROUND(BYTES/1024/1024/1024,2) GB,SEGMENT_TYPE,TABLESPACE_NAME FROM DBA_SEGMENTS WHERE

SEGMENT_NAME IN (‘S_ORDER’)If source table has indexes also, then remap index tablespaces as well if same name tablespaces not available at target.

for PDBs export/import create a pdb service entry in tns files and use same to connect to pdb.

service can be created as follows-

3. Now lets take the export backup. As table size is large we will use compression for both data and metadata (compress=all) to reduce dumpfile size (use only if you have advanced compression license ). exclude statistics of table and gather stats at target once import is complete (stats import otherwise take considerable time so this is better option).

Also use parallel option if using enterprise edition to reduce backup time. (as per available cpu and free memory).

Always use a parfile as it can be reused if any error occurs –

run expdp in background as – nohup expdp parfile=exp.par &

import logs –

Export: Release 19.0.0.0.0 – Production on Wed Apr 12 10:07:32 2023

Version 19.17.0.0.0Copyright (c) 1982, 2019, Oracle and/or its affiliates. All rights reserved.

;;;

Connected to: Oracle Database 19c Enterprise Edition Release 19.0.0.0.0 – Production

;;; ****

;;; Parfile values:

;;; parfile: exclude=STATISTICS

;;; parfile: version=12.1

;;; parfile: compression=all;;; parfile: dumpfile=exp_S_ORDER_12Apr2023_%U.dmp

;;; parfile: logfile=EXPORT_S_ORDER_12APR23.log

;;; parfile: tables=SIEBEL.S_ORDER

;;; parfile: cluster=N

;;; parfile: parallel=24

;;; parfile: directory=exp

;;; parfile: userid=dbadmin/@PARCHPDB

;;; * Starting “DBADMIN”.”SYS_EXPORT_TABLE_03″: dbadmin/*@PARCHPDB parfile=exp.par

Processing object type TABLE_EXPORT/TABLE/TABLE_DATA

Processing object type TABLE_EXPORT/TABLE/INDEX/INDEX

Processing object type TABLE_EXPORT/TABLE/GRANT/OWNER_GRANT/OBJECT_GRANT

Processing object type TABLE_EXPORT/TABLE/TABLE

. . exported “SIEBEL”.”S_ORDER” 64.89 GB 673703764 rows

Master table “DBADMIN”.”SYS_EXPORT_TABLE_03″ successfully loaded/unloaded

Dump file set for DBADMIN.SYS_EXPORT_TABLE_03 is:

/u03/exp/exp_S_ORDER_12Apr2023_01.dmp

/u03/exp/exp_S_ORDER_12Apr2023_02.dmp

/u03/exp/exp_S_ORDER_12Apr2023_03.dmp

/u03/exp/exp_S_ORDER_12Apr2023_04.dmp

/u03/exp/exp_S_ORDER_12Apr2023_05.dmp

/u03/exp/exp_S_ORDER_12Apr2023_06.dmp

/u03/exp/exp_S_ORDER_12Apr2023_07.dmp

/u03/exp/exp_S_ORDER_12Apr2023_08.dmp

/u03/exp/exp_S_ORDER_12Apr2023_09.dmp

/u03/exp/exp_S_ORDER_12Apr2023_10.dmp

/u03/exp/exp_S_ORDER_12Apr2023_11.dmp

/u03/exp/exp_S_ORDER_12Apr2023_12.dmp

/u03/exp/exp_S_ORDER_12Apr2023_13.dmp

/u03/exp/exp_S_ORDER_12Apr2023_14.dmp

/u03/exp/exp_S_ORDER_12Apr2023_15.dmp

/u03/exp/exp_S_ORDER_12Apr2023_16.dmp

/u03/exp/exp_S_ORDER_12Apr2023_17.dmp

/u03/exp/exp_S_ORDER_12Apr2023_18.dmp

/u03/exp/exp_S_ORDER_12Apr2023_19.dmp

/u03/exp/exp_S_ORDER_12Apr2023_20.dmp

/u03/exp/exp_S_ORDER_12Apr2023_21.dmp

/u03/exp/exp_S_ORDER_12Apr2023_22.dmp

/u03/exp/exp_S_ORDER_12Apr2023_23.dmp

/u03/exp/exp_S_ORDER_12Apr2023_24.dmp

/u03/exp/exp_S_ORDER_12Apr2023_25.dmp

Job “DBADMIN”.”SYS_EXPORT_TABLE_03″ successfully completed at Wed Apr 12 10:27:43 2023 elapsed 0 00:20:07SCP the dumpfiles to target machine backup mountpoint (where datapump) directory is also created).

3. Check target schema default tablespace and related tablespace free space. atleast 20% extra space should be available as incase of import, table size may increase/decrease depending on datatype and db version and compression settings. In our case we added space to make free space upto 600 GB. if you dont want import to fail due to space issue then set resumable timeout parameter at target ( in seconds), so imp will wait till space is added. ALTER SYSTEM SET RESUMABLE_TIMEOUT=1800;

4. Now let us import the table using parfile. – nohup impdp parfile=imp.par &

Connected to: Oracle Database 12c Enterprise Edition Release 12.1.0.0.0 – Production ;;; ************************************************************************** ;;; Parfile values: ;;; parfile: table_exists_action=SKIP ;;; parfile: remap_table=S_ORDER:S_ORDER_NEW ;;; parfile: remap_tablespace=SIEBEL_DATA:ADHOC_SIEBEL_DATA ;;; parfile: remap_schema=SIEBEL:SIEBEL_ADHOC ;;; parfile: dumpfile=exp_S_ORDER_12Apr2023_%U.dmp ;;; parfile: logfile=IMPORT_S_ORDER_12APR23.log ;;; parfile: tables=SIEBEL.S_ORDER ;;; parfile: cluster=N ;;; parfile: parallel=24 ;;; parfile: directory=exp12mar ;;; parfile: userid=dbadmin/********@PUBIPDB ;;; ************************************************************************** Master table “DBADMIN”.”SYS_IMPORT_TABLE_01″ successfully loaded/unloaded Starting “DBADMIN”.”SYS_IMPORT_TABLE_01″: dbadmin/********@PUBIPDB parfile=imp.par Processing object type TABLE_EXPORT/TABLE/TABLE Processing object type TABLE_EXPORT/TABLE/TABLE_DATA . . imported “SIEBEL_ADHOC”.”S_ORDER_NEW” 64.89 GB 673703764 rows

Gather Table stats post import – (use higher degree for faster response).

exec dbms_stats.gather_table_stats(ownname => ‘SIEBEL_ADHOC’,tabname => ‘S_ORDER_NEW’,method_opt =>’FOR ALL COLUMNS SIZE AUTO’,degree => 32,cascade =>true, no_invalidate=> TRUE, granularity=> ‘AUTO’,options=>’GATHER AUTO’);

check table at target. (match rows during export/import).

In our case table size has increased post import as in source compression was enabled at tablespace level, but not enabled at target.

-

insert statement suspended, wait error to be cleared

Hi Readers, in this blogpost i am sharing a wait event/error encountered during an insert statement execution on a partitioned table.

The statement was a simple insert statement, but it remained suspended without any insertion.

query – INSERT INTO USER.T1(

|ROW_ID,

|IF_ROW_STAT,

|IF_ROW_BATCH_NUM,

|LD_LEAD_NUM,

|NLD_NOTE,

|NLD_PRIV_FLG,

|NLD_NOTE_TYPE,

|X_NLD_PROPOSAL_ID,

|ARCH_TIMESTAMP

|)

|SELECT

|ROW_ID,

|IF_ROW_STAT,

|IF_ROW_BATCH_NUM,

|LD_LEAD_NUM,

|to_lob(NLD_NOTE),

|NLD_PRIV_FLG,

|NLD_NOTE_TYPE,

|X_NLD_PROPOSAL_ID,

|SYSTIMESTAMP

|FROM USER2.EIM_LEAD WHERE IF_ROW_BATCH_NUM BETWEEN 51 AND 100on checking the alert logs found below message –

2023-01-06T20:32:59.920380+05:30

PSBLPDB(3):statement in resumable session ‘User USER(110), Session 7567, Instance 3’ was suspended due to

SBLPDB(3): ORA-01536: space quota exceeded for tablespace ‘USER_DATA’so the reason was that all partitions of this table were moved to new tablespace but table’s default tablespace was still showing old tablespace as evident from table ddl.

CREATE TABLE “USER”.”T1″

( “ROW_ID” VARCHAR2(15 CHAR),

“IF_ROW_STAT” VARCHAR2(30 CHAR),

“IF_ROW_BATCH_NUM” NUMBER(15,0),

“LD_LEAD_NUM” VARCHAR2(30 CHAR),

“NLD_NOTE” VARCHAR2(3000 CHAR),

“NLD_PRIV_FLG” VARCHAR2(5 CHAR),

“NLD_NOTE_TYPE” VARCHAR2(15 CHAR),

“X_NLD_PROPOSAL_ID” VARCHAR2(15 CHAR),

“ARCH_TIMESTAMP” DATE

) PCTFREE 10 PCTUSED 40 INITRANS 1 MAXTRANS 255

STORAGE(

BUFFER_POOL DEFAULT FLASH_CACHE DEFAULT CELL_FLASH_CACHE DEFAULT)

TABLESPACE “EIMUSER_DATA”

PARTITION BY RANGE (“ARCH_TIMESTAMP”) INTERVAL (INTERVAL ‘1’ MINUTE)

(PARTITION “PART_ARC_EIM_LEAD_EPROPSL” VALUES LESS THAN (TO_DATE(‘ 2021-06-30 00:00:00’, ‘SYYYY-MM-DD HH24SS’, ‘NLS_CALENDAR=GREGORIAN’)) SEGMENT CREATION DEFERRED

PCTFREE 10 PCTUSED 40 INITRANS 1 MAXTRANS 255

ROW STORE COMPRESS ADVANCED LOGGING

STORAGE(

BUFFER_POOL DEFAULT FLASH_CACHE DEFAULT CELL_FLASH_CACHE DEFAULT)

TABLESPACE “USER_DATA1” ) ;the user quota from old tablespace was removed as old tablespace was planned to be dropped. so we need to modify this partitioned table ddl in order that new partitions are created in new tablespace.

in order to resolve such issues –

use below command –

ALTER TABLE USER.TABLE_NAME MODIFY DEFAULT ATTRIBUTES TABLESPACE NEW_TABLESPACE;

-

CHECK TABLE PROPERTIES USING SQL DEVELOPER.

Hello Readers, In this blogpost i am sharing how to use sql developer to quickly check a table details and save its ddl.

CLICK ON VIEW AND THEN GO TO FIND DB OBJECT

CHOOSE YOUR DATABASE CONNECTION, SCHEMA NAME, OBJECT TYP/NAME. IF YOU HAVE ONLY ONE TABLE WITH THIS NAME IN ENTIRE DB THEN JUST TYPE THE OBJECT NAME AND HIT GO.

YOU CAN SEE THIS TABLE WAS FOUND IN 2 DIFFERENT SCHEMAS –

NOW CLICK ON TABLE NAME UNDER THE SCHEMA NAMES WHOSE DETAILS YOU NEED AND GET THE DETAILS OF TABLE LIKE BELOW

DETAILS OF COLUMNS IN THE TABLE –

YOU CAN ALSO CHECK MODEL SECTION FOR BETTER OUTPUT OF COLUMNS –

CHECK STATS OF TABLE AS BELOW –

DETAILS SECTION GIVES FURTHER USEFUL DETAILS REGARDING THE TABLE –

YOU CAN QUICKLY COPY TABLE DDL TO CREATE IN SOME OTHER SCHEMA –

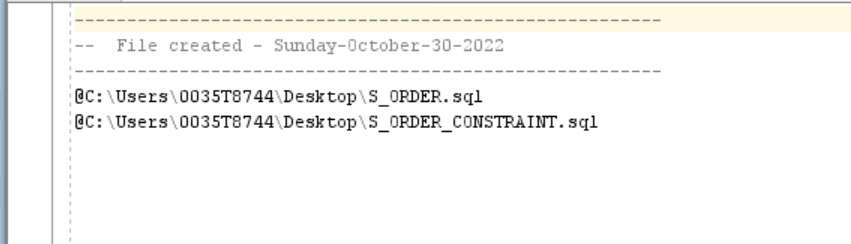

YOU CAN ALSO CLICK ON ACTION CHOOSE EXPORT TO EXPORT DDL OF TABLE – (UNTICK EXPORT DATA)

A NOTEPAD FILE WILL BE GENERATED CONTAINING TABLE DDL ALSO A SEPARATE CONSTRAINT FILE AND INDEX FILE WILL BE GENERATED (DEPENDS IF THESE EXIST FOR THE TABLE). IN MY CASE TABLE HAD NO INDEXES.

-

CHANGE REDOLOGS AND CONTROLFILE DISKGROUP LOCATION IN ORACLE 19C CDB POST DATABASE CREATION.

Hi Readers, in this blogpost i am sharing steps on how you can change redologs and controlfiles location post db creation if they have been placed mistakenly in wrong diskgroup or redo log size is not as per requirement.

In my case, we had selected recovery area as +FRA diskgroup and dbca created redologs in DB create file dest DATA and FRA in typical installation mode , as it does not ask specifically for redologs location in this creation mode.

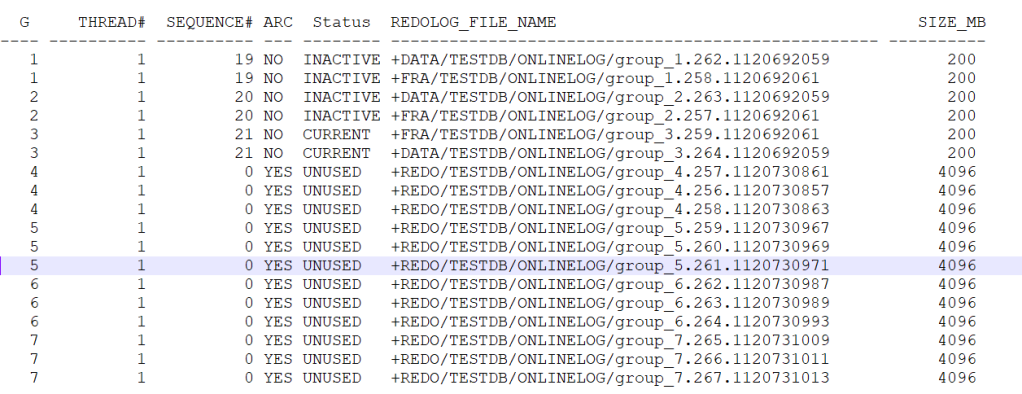

REDOLOGS location and size post installation –

column REDOLOG_FILE_NAME format a50;

set lines 1000

SELECT a.GROUP#, a.THREAD#, a.SEQUENCE#,

a.ARCHIVED, a.STATUS, b.MEMBER AS REDOLOG_FILE_NAME,

(a.BYTES/1024/1024) AS SIZE_MB FROM v$log a

JOIN v$logfile b ON a.Group#=b.Group#

ORDER BY a.GROUP#;

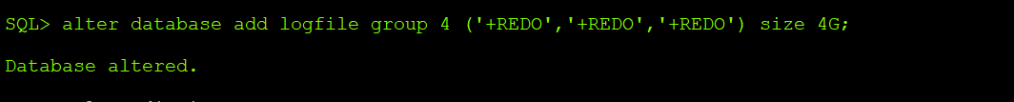

So in order to drop these small sized redo logs in FRA and REDO and re-create with 4GB size in REDO diskgroup, i had performed following steps –

- Add new groups 3 members and 4 GB each size. ensure diskgroup has sufficient space before creation.

Now we have old group with 2 members and 200 MB size and newly created groups with 3 members and 4GB each size.

for dropping old redolog groups make them inactive by switching logfiles and then drop them.

FINAL EXPECTED RESULT –

Similarly lets check how to move controlfiles location to different diskgroup –

current location –

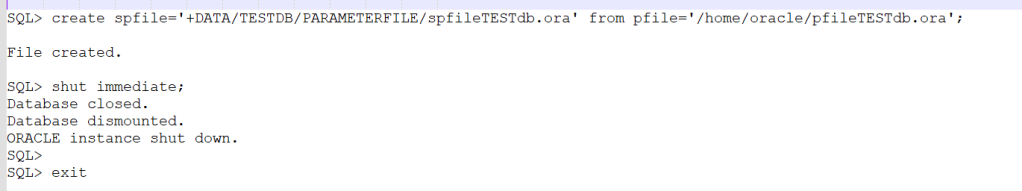

create a pfile from spfile and save the original pfile, perform edits in a new pfile to avoid any issues due to wrong edit.

now shutdown the database

edit controlfile values as below in pfile

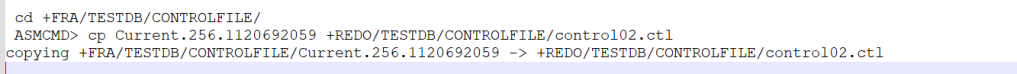

login as grid user and login to asmcmd

GO TO REDO DISKGROUP AND CREATE CONTROLFILE DIRECTORY TO PLACE THE CONTROLFILE FROM FRA DISKGROUP

You may encounter below error if you try to copy with same filename. so we will rename the controlfile.

so copy with new file name as follows –

delete the old FRA controlfile (you may perform this step later after testing db startup).

ASMCMD> rm Current.256.1120692059

new controlfile will now show as follows –

ASMCMD> cd +REDO/TESTDB/CONTROLFILE

ASMCMD> ls -lrt

WARNING: option ‘r’ is deprecated for ‘ls’

please use ‘reverse’Type Redund Striped Time Sys Name

CONTROLFILE UNPROT FINE NOV 14 11:00:00 N control02.ctl => +REDO/ASM/CONTROLFILE/control02.ctl.268.1120736603similarly to maintain uniformity in controlfile naming I have renamed DATAdiskgroup controlfile as follows –

ASMCMD> cd +DATA/TESTDB/CONTROLFILE

ASMCMD> ls -lrt

WARNING: option ‘r’ is deprecated for ‘ls’

please use ‘reverse’Type Redund Striped Time Sys Name

CONTROLFILE UNPROT FINE NOV 14 11:00:00 Y Current.261.1120692059

ASMCMD> cp Current.261.1120692059 control01.ctl

copying +DATA/TESTDB/CONTROLFILE/Current.261.1120692059 -> +DATA/TESTDB/CONTROLFILE/control01.ctl

ASMCMD> ls -lrt

WARNING: option ‘r’ is deprecated for ‘ls’

please use ‘reverse’Type Redund Striped Time Sys Name

CONTROLFILE UNPROT FINE NOV 14 11:00:00 Y Current.261.1120692059

CONTROLFILE UNPROT FINE NOV 14 11:00:00 N control01.ctl => +DATA/ASM/CONTROLFILE/control01.ctl.264.1120736683

ASMCMD> rm Current.261.1120692059Now startup database with pfile and create a spfile from it.

now perform final startup.if database opens without errors and location is as expected then you are good to go.

-

USE COMPRESSION FOR TABLE/INDEXES IN 12.1.0.2.0 PDB

Hi Readers, in this blogpost i am sharing steps to enable compression for tables/indexes with minimal DML (INSERT/UPDATE/DELETE) activity in order to reclaim tablespace free space in a WAREHOUSE environment.

Note – Please check your licensing for using compression features (only available in Enterprise Edition).

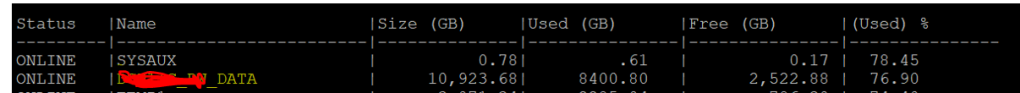

Here, we had moved 21 tables in same tablespace with compress option –

TABLESPACE stats before the activity – (free space 2522 GB)

TABLESPACE stats post activity – (3 TB APPROX FREE SPACE WAS RECLAIMED)

I am only showing here relative gain for tables – (remaining space gain was achieved from index rebuild)

STEPS –

- DISABLE ARCHIVELOGGING IF POSSIBLE (STANDALONE DEV. ENV.) FOR THIS ACTIVITY TO AVOID HIGH ARCHIVE GENERATION).

- SAVE STATS OF TABLE/INDEX SIZES PRE-ACTIVITY TO COMPARE GAIN.

- MOVE THE TABLE USING PARALLEL SESSIONS TO SAVE TIME.

- USE SHELL SCRIPT TO RUN THE OPERATION IN NOHUP.

- Remember to rebuild indexes of related tables otherwise they will remain unusable.

SHELL SCRIPT EXAMPLE –

export ORACLE_SID=DB_INSTANCE_NAME

export ORACLE_BASE=/u02/app/oracle/product

export ORACLE_HOME=/u02/app/oracle/product/12.1.0/dbhome_1

export PATH=$PATH:$ORACLE_HOME/bin:$ORACLE_HOME/OPatch

NOW=$(date +”%m-%d-%Y”)

/u02/app/oracle/product/12.1.0/dbhome_1/bin/sqlplus “/ as sysdba” << EOF

ALTER SESSION SET CONTAINER=PDBNAME;

@/home/oracle/table_compress_reorg_2.sql

exit

EOFsql file code –

spool /home/oracle/table_compress_reorg_181022_2.log

set echo on

set time on

set timing on

set feedback on

column owner format a30

column segment_name format a40

column segment_type format a30

set lines 1000 pages 1000

!date

select owner,segment_name,segment_type,bytes/(1024*1024*1024)”Size(GB)” from dba_segments where segment_name in(‘TABLE_NAME’);

alter table OWNER.TABLE_NAME enable row movement;

alter table OWNER.TABLE_NAME move tablespace TBSNAME compress parallel 32;

ALTER TABLE OWNER.TABLE_NAME NOPARALLEL;

ALTER TABLE OWNER.TABLE_NAME disable ROW MOVEMENT;

select owner,segment_name,segment_type,bytes/(1024*1024*1024)”Size(GB)” from dba_segments where segment_name in(‘TABLE_NAME’);

Prompt “OWNER.TABLE_NAME reorg completed”–FOR INDEXED TABLES

alter index OWNER.INDEX_NAME rebuild parallel 32 compress;

alter index OWNER.INDEX_NAME noparallel;Now run the shell script in nohup –

nohup sh scriptname.sh &

In order to check which table/indexes have been compressed – (COMPRESSION COLUMN WILL SHOW ENABLED)

set lines 300 pages 200

col owner for a13

col index_name for a30

col table_name for a40

col tablespace_name for a20

SELECT INDEX_NAME,TABLESPACE_NAME,status,TABLE_NAME,PARTITIONED,COMPRESSION,INI_TRANS,DEGREE,OWNER FROM DBA_INDEXES WHERE TABLE_NAME IN (NAMES) ORDER BY 1;set lines 300 pages 200

col table_name for a30

col TABLESPACE_NAME for a20

COL OWNER FOR A12

col compression for a11select table_name,INI_TRANS,owner,tablespace_name,degree,PARTITIONED,row_movement,COMPRESSION from dba_tables where

table_name IN (NAMES) ORDER BY 1;Below are some suggestions found in oracle support docs regarding compression implementation –

-

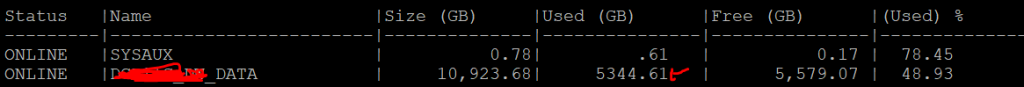

MAX DB_FILES VALUE REACHED IN 12c CDB CONTROLFILE

Hi Readers, In this blogpost i am sharing a scenario observed in one of our large DATA WAREHOUSE environments.

Number of datafiles reached its maximum value in the database.

DB VERSION – 12.1

PDBS – 1 APP + PDB$SEED

DB size is approx 123 TB

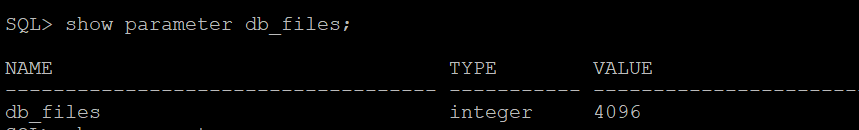

DB_FILES parameter in controlfile was set to 4096 and it reached the limit as shown below – (3967+126+2)= 4095.

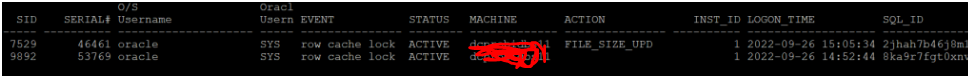

Interestingly, we could not figure out the issue, as when we tried to add the datafile the session got stuck without any error for hours.

There was no error in alert logs as well. session kept showing row cache lock wait event.

DATAFILE COUNT IN CDB,PDB AND SEED

current value in controlfile Issue was verified when we tried to create a new tablespace –

We received below POA from ORACLE SUPPORT –

1.make sure the standby DB_FILES is same as primary.

2. If you increase the value of DB_FILES, then you must shut down and restart all instances accessing the database before the new value can take effect.

3.If you have a primary and standby database, then they should have the same value for this parameter.DB_FILES value should be same in all the instances in a RAC environment

4. cannot do a rolling bounce. The bottom line is cannot have instances with different setting.

So, the steps are:

a)SQL> alter system set db_files=x scope=spfile; <<< this set the parameter in spfile and set the next startup.

b)You can shutdown the instance one by one, until ALL instances are down.Then can start one by one, or startup the database.DB_FILES max value is also OS dependent so please check respective OS installation guide for details.

We raised it to 6000 as shown below –

References –

10gR2 New Feature: Eliminate Control File Re-Creation ( Doc ID 329981.1 )

HOW TO CHANGE MAXDATAFILES, MAXLOGFILES AFTER CREATING DATABASE ( Doc ID 1008318.6 )

-

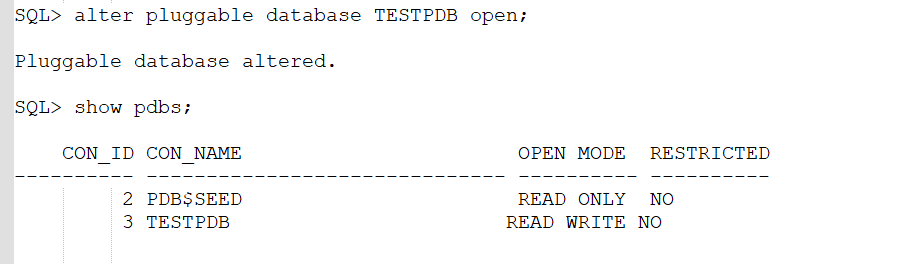

ORACLE 12CR2 PDB IN RESTRICTED MODE DUE TO FAILED DATAPATCH APPLY

Hi Readers, In this blogpost i am sharing issue related to DB PSU PATCH apply due to which PDB went into restricted mode and steps to resolved the same.

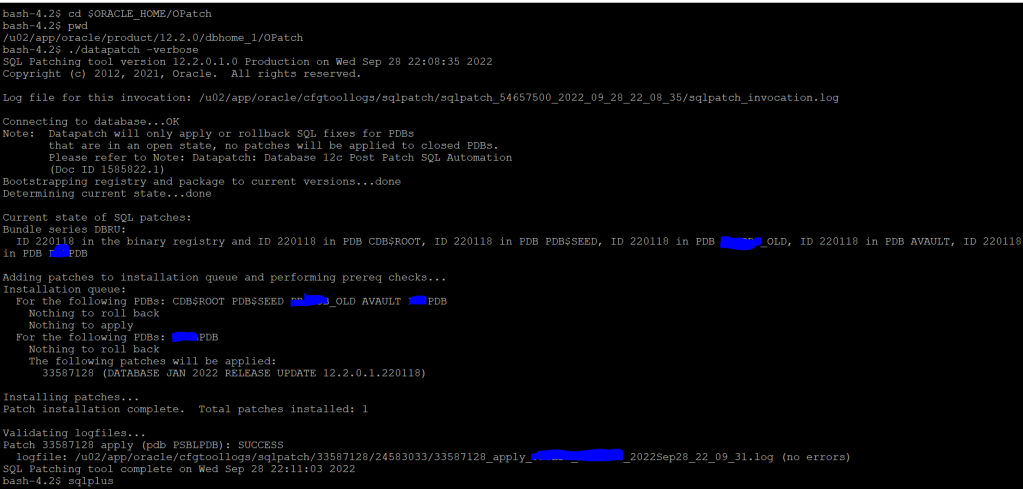

We applied JAN 2022 PSU on single node 12cR2 CDB with 5 PDBS.

All PDBs worked fine for 10 days post patch apply. However,

one of the app PDBs went into restricted mode when we restarted the database after 10 days.

We received below PDB violation for that PDB

SET LINESIZE 200

COLUMN time FORMAT A30

COLUMN name FORMAT A30

COLUMN cause FORMAT A30

COLUMN message FORMAT A30SELECT time, name, cause, message,STATUS

FROM pdb_plug_in_violations

WHERE type=’ERROR’ and time > TRUNC(SYSTIMESTAMP)

ORDER BY time;

We tried to manually apply datapatch but it failed.

We also tried to apply DATAPATCH on only the problematic PDB but it failed.

Registry components of ALL PDBS were valid including restricted PDB

We sought suggestion from ORACLE and they asked to re-create below registry for problematic PDB

Connect to PDB

SQL> alter session set container=PDBNAME;– Take the backup of registry$sqlpatch.

SQL> create table registry$sqlpatch_org as select * from registry$sqlpatch ;– Drop the table registry$sqlpatch.

SQL> exec dbms_pdb.exec_as_oracle_script(‘drop table registry$sqlpatch’);– Re-create the table by running the script.

SQL> @$ORACLE_HOME/rdbms/admin/catsqlreg.sql– Retry datapatch.

Now we re-ran datapatch

This time patch applied successfully for restricted PDB and we closed and opened the PDB to move it out of restrict mode.

We re-verified violation and its status shows resolved now.

-

SQL DEVELOPER DATABASE REPORT GENERATION

Hi Readers, in this blogpost i am sharing use case of SQL DEVELOPER to generate reports related to database activity –

sql developer version – 21.4.2.018

db version – 12cR2

For example – If we want to monitor inactive/active sessions in real-time and also share report snaps every hour to our team.

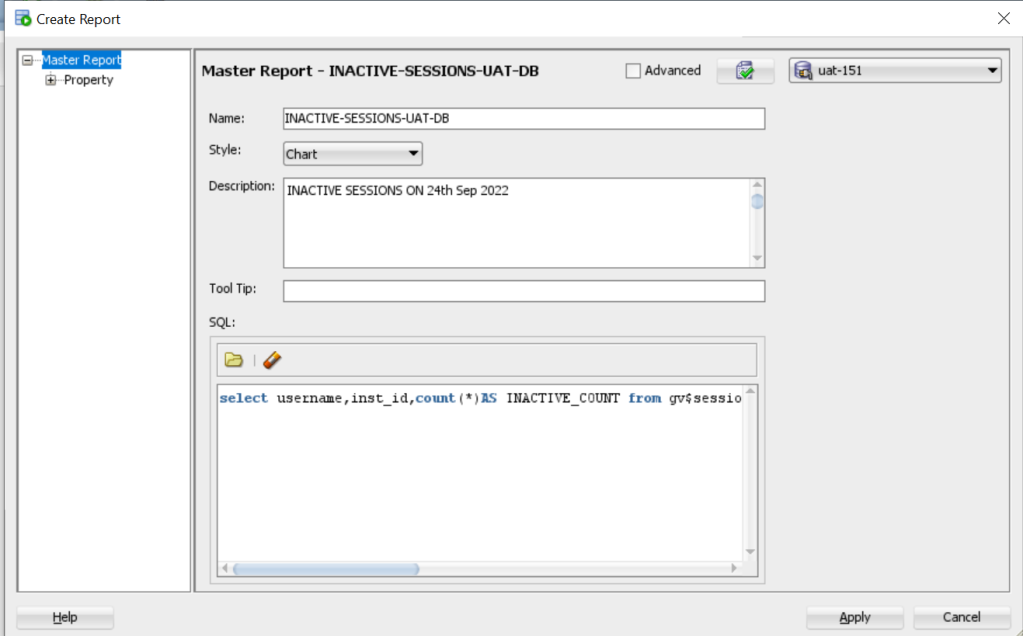

QUERY i used to generate/monitor inactive sessions report –

select username,inst_id,count(*)AS INACTIVE_COUNT from gv$session where status=’INACTIVE’ and username NOT IN (‘SYS’,'(null)’) group by username,inst_id order by 3 desc;

select the query in sql developer and right click on it – choose create-report.

Now, fill the details as shown below, choose db connection name on top right and click the green icon near advanced option to verify expected results. Choose the refresh rate in seconds to see real-time values in form of varying bar lengths.

Once you verify report stats, click on apply. This report is saved as user defined report and you can run it quickly for all saved database connections.

To access user-defined reports, click on view and go to reports –

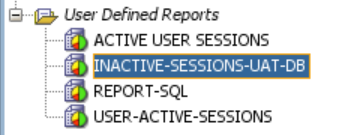

go to user defined reports section –

You can also add title to graph,adjust fonts and bar colors.

To do same, right click on report name and choose edit

Save the report with timestamp in html format –

right click on report name and choose HTML option. specify html file location and click on include timestamp option.

HTML Report snap will generate and saved to local disk as shown below –

You can also name X or Y axis for better understanding –

-

Subscribe

Subscribed

Already have a WordPress.com account? Log in now.